from scipy.signal import lfilter

from scipy.fftpack import fft, ifft

%matplotlib inline

Filtering¶

General relations for cross-correlations¶

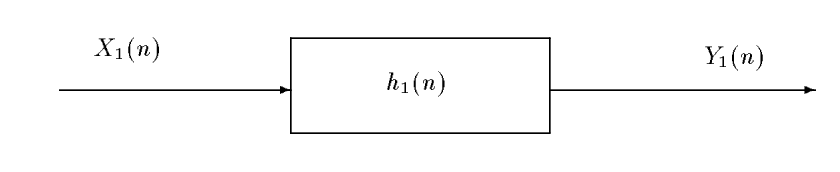

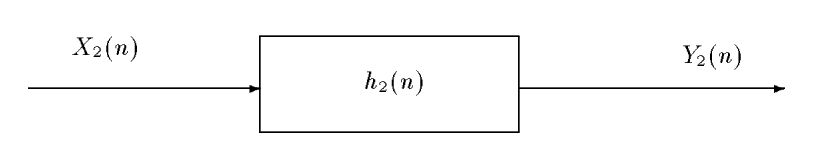

We consider a situation where we want to study the correlations between the different inputs and outputs of a pair of two filters: $$ \left{

\begin{array}{lcr} Y_1(n,\omega) & = & (X_1*h_1)(n,\omega),\\ Y_2(n,\omega) & = & (X_2*h_2)(n,\omega), \end{array}\right. $$

Let us compute the intercorrelation between $Y_1(n)$ and $Y_2(n)$ : $$ R_{Y_1Y_2}(m) = \E{Y_1(n,\omega)Y_2^*(n-m,\omega))} = \E{(X_1*h_1)(n,\omega))(X_2^* *h_2^*)(n-m,\omega))}. $$ The two convolution products are \begin{eqnarray*} (X_1*h_1)(n,\omega)) & = & \sum_u X_1(u,\omega))h_1(n-u),\\ (X_2*h_2)(n-m,\omega))& = & \sum_v X_2(v,\omega))h_2(n-m-v), \end{eqnarray*} and \begin{eqnarray*} R_{Y_1Y_2}(m) & = & \E{\sum_u X_1(n-u,\omega))h_1(u)\sum_v X_2^*(n-m-v,\omega))h_2^*(v)}\\ & = & \E{\sum_u \sum_v X_1(n-u)h_1(u) X_2^*(n-m-v)h_2^*(v)}\\ & = & \sum_u \sum_v h_1(u) R_{X_1X_2}(m+v-u) h_2^*(v). \end{eqnarray*} Looking at the sum over $u$, we recognize a convolution product between $h_1$ and $R_{X_1X_2}$, expressed at time $(m+v)$ : \begin{eqnarray*} R_{Y_1Y_2}(m) & = & \sum_v (h_1 * R_{X_1X_2})(m+v) h_2^*(v)\\ & = & \sum_v (h_1 * R_{X_1X_2})(m+v) h_2^{(-)*}(-v), \end{eqnarray*} where we have noted $h_2^{(-)}(v) = h_2(-v)$. In this last relation, we recognize anoter convolution product, this time between $(h_1 * R_{X_1X_2})$ and $h_2^{*(-)}$ : \begin{eqnarray*} R_{Y_1Y_2}(m) & = & \sum_v (h_1 * R_{X_1X_2})(m+v) h_2^{*(-)}(-v)\\ & = & \sum_{v'}(h_1 * R_{X_1X_2})(m-v') h_2^{*(-)}(v') \\ & = & \left( h_1 * R_{X_1X_2} * h_2^{*(-)} \right) (m). \end{eqnarray*}

We finally obtain the important formula: $$ \eqboxc{R_{Y_1Y_2}(m) = \left( h_1 * R_{X_1X_2} * h_2^{*(-)}\right) (m)}. $$

By-products¶

- [Autocorrelation of the output of a filter] With a single filter we can apply the previous formula, with $$ \left{

\right. $$ Of course $Y_1=Y_2=Y$, and $$ \eqboxb{R{YY}(m) = \left( h * R{XX} h^{(-)}\right) (m)}. $$

- [Cross correlation between output and input] We want to measure the correlation between the input and output of a filter. Toward this goal, we consider $$ \left{

\right. $$ In this case, we got $$ \eqboxb{R{YX}(m) = \left( h * R{XX}\right) (m)}. $$ The cross correlation between the output and the input of filter has the very same apparence as the filtering which links the output to the input.

Examples¶

We study now the filtering of random signals. We begin with the classical impulse response $h(n) = a^n$, with $x(n)$ a uniform distributed white noise at the input, and we denote $y(n)$ the output.

Filter the signal $x(n)$, with the help of the function

lfilter. Compare the input and output signals, and in particular their variations. Compare the histograms. Look at the Fourier transform of the output. Do this for several values of $a$, beginning with $a=0.9$.Using the function

xcorr(import it viafrom correlation import xcorr), compute all the possible correlations between the input and the output. What would be the correlation matrix associated with the signal $x(n) = [x(n)~ y(n)]^t$? Compare the impulse response $h$ to the cross-correlation $Ryx(k)$. Explain. Experiment by varying the number of samples $N$ and $a$ (including its sign).Consider the identification of the impulse response by cross-correlation, as above, but in the noisy case. Add a Gaussian noise to the output and compute the cross-correlation. Observe, comment and experiment with the parameters.

The filtering is done thanks to the function lfilter. We have first to import it, eg as

from scipy.signal import lfilter

We will also need to play with ffts so it is a good time to import it from fftpack

from scipy.fft import fft, ifftN = 1000 #Number of samples

x = stats.uniform(-0.5, 1).rvs(N)

a = 0.9

# Filtering and plots...

# FILL IN...

y = lfilter([1], [1, -a], x)

figure(figsize=(8, 3))

plot(x)

xlabel("Time")

title("Initial signal")

figure(figsize=(8, 3))

plot(y)

xlabel("Time")

title("Filtered signal")

We see that the output has slower variations than the input. This is the result of the filtering operation. Let us now look at the histograms:

#Histograms

# FILL IN

# Histograms

figure(figsize=(8, 3))

plt.hist(x, bins=20, rwidth=0.95)

plt.xlabel("Amplitude")

plt.title("Initial signal")

figure(figsize=(8, 3))

plt.hist(y, bins=20, rwidth=0.95)

plt.xlabel("Amplitude")

plt.title("Filtered signal")

While the initial signal is uniformly distributed, the histogram of the output looks like the histogram of a gaussian. Actually, this is related to the central limit theorem: the mixture of iid variables tends to a gaussian. This also explains th emodification of the amplitudes observed on the time signal.

Let us finally look at the Fourier transform:

#FILL IN

f = arange(N) / N - 0.5

fig, ax = subplots(2, 1, figsize=(7, 5))

ax[0].plot(f, abs(fftshift(fft(x))))

ax[0].set_title("Fourier transform of the input")

ax[0].set_xlabel("Frequency")

ax[0].axis('tight') #Tight layout of the axis

ax[1].plot(f, abs(fftshift(fft(y))))

ax[1].set_title("Fourier transform of the output")

ax[1].set_xlabel("Frequency")

fig.tight_layout()

ax[1].axis('tight')

Recall how the transfer function changes with $a$:

figure(figsize=(8, 3))

d = zeros(N)

d[0] = 1

for a in (-0.95, -0.8, -0.4, 0.4, 0.8, 0.9):

h = lfilter([1], [1, -a], d)

H = fft(h)

plot(f, abs(fftshift(H)), label='a={}'.format(a))

legend()

axis('tight')

_ = xlabel("Frequency")

Correlation matrix¶

from correlation import xcorr

N = 1000 #Number of samples

x = stats.uniform(-0.5, 1).rvs(N)

a = 0.8

y = lfilter([1], [1, -a], x)

L = 30

Rxx, lags = xcorr(x, x, maxlags=L)

Rxy, lags = xcorr(x, y, maxlags=L)

Ryx, lags = xcorr(y, x, maxlags=L)

Ryy, lags = xcorr(y, y, maxlags=L)

fig, ax = subplots(2, 2, figsize=(7, 5))

axf = ax.flatten()

Rtitles = ('Rxx', 'Rxy', 'Ryx', 'Ryy')

for k, z in enumerate((Rxx, Rxy, Ryx, Ryy)):

axf[k].plot(lags, z)

axf[k].set_title(Rtitles[k])

fig.tight_layout()

We have represented above all the possible correlations between the input and the ouput. This representation corresponds to the correlation matrix of the vector $z(n)=[x(n) ~~ y(n)]^H$ that would give $$ \mathbb{E}\left\{ \left[ \begin{array}[c]{c} x(n)\\ y(n) \end{array}\right]\left[ \begin{array}[c]{cc} x(n-k)^{*} & y(n-k)^{*}\end{array}\right]\right\} =\left[ \begin{array}[c]{cc} R_{xx}(k) & R_{xy}(k)\\ R_{yx}(k) & R_{yy}(k) \end{array}\right] $$

Identification of a filter by cross-correlation¶

We know that the cross-correlation of the output of a system with IR $h$ with its input is given by $$ R_{yx}(k)=\left(R_{xx}*h\right)(k). $$ When the input is a white noise, then its autocorrelation $R_{xx}(k)$ is a Dirac impulse with weight $\sigma_x^2$, $R_{xx}(k)=\sigma_x^2\delta(k)$, and $R_{yx}$ is proportional to the impulse response: $$ R_{yx}(k)=\sigma_x^2 h(k). $$ This is what we observe here:

# An object "uniform random variable" with fixed bounds [0,1]

from correlation import xcorr

x_uni = stats.uniform(loc=0, scale=1)

(m, v) = x_uni.stats(moments='mv')

print("Uniform distribution: ",

"Value of the mean : {0:2.3f} and of the variance {1:2.3f}".format(

float(m), float(v)))

N = 1000 #Number of samples

x = stats.uniform(-0.5, 1).rvs(N) #generates N values for x

a = 0.8

y = lfilter([1], [1, -a], x) #Computes the output of the system

L = 30

Ryx, lags = xcorr(y, x, maxlags=L) #then the cross-correlation

d = zeros(N)

d[0] = 1

h = lfilter([1], [1, -a], d) #and the impulse response

plot(arange(L), Ryx[arange(L, L + L)], label="Intercorrelation $R_{yx}(k)$")

plot(arange(L), v * h[arange(L)], label="Impulse response $h(k)$")

xlabel("Lags $k$")

grid(True)

legend()

In the noisy case, the same kind of observations hold. Indeed, if $z$ is a corrupted version of $y$, with $z(n)=y(n)+w(n)$, then $$ R_{zx}(k)=R_{yx}(k)+R_{wx}(k)=R_{yx}(k) $$ provided that $x$ and $w$ are uncorrelated, which is reasonable assumption.

N = 1000

#Remember that the variance of $x$ is given by

x_uni = stats.uniform(-0.5, 1)

(m, v) = x_uni.stats(moments='mv')

print("Uniform distribution: ",

"Value of the mean : {0:2.3f} and of the variance {1:2.3f}".format(

float(m), float(v)))

N = 1000 #Number of samples

x = stats.uniform(-0.5, 1).rvs(N) #generates N values for x

a = 0.8

y = lfilter([1], [1, -a], x) #Computes the output of the system

w = stats.norm(0, 1).rvs(N) #Gaussian noise

y = y + 0.5 * w

L = 50

Ryx, lags = xcorr(y, x, maxlags=L) #then the cross-correlation

d = zeros(N)

d[0] = 1

h = lfilter([1], [1, -a], d) #and the impulse response

plot(arange(L), Ryx[arange(L, L + L)], label="Intercorrelation $R_{yx}(k)$")

plot(arange(L), v * h[arange(L)], label="Impulse response $h(k)$")

xlabel("Lags $k$")

grid(True)

legend()

while the direct measure of the impulse response would give

plot(

arange(N), h + 0.5 * w, label="Direct measurement of the impulse response")

plot(arange(N), h, label="True impulse response")

xlim([0, 30])

_ = legend()

Hence, we see that identification of a system is possible by cross-correlation, even when dealing with noisy outputs. Such identification would be impossible by direct measurement of the IR, because of the presence of noise.