Image Retrieval#

After training the network to embed image features based on their dominant colors, you will assess whether the learned embedding space effectively groups images by color similarity. The evaluation should be organized into two parts, explained below. As a preliminary step, you will need to compute the embeddings for all images in the dataset (both training and test sets) using the trained model. It is assumed in the following sections that the embeddings have been computed.

Part 1: Image-to-Image Retrieval#

In this setup, a subset of images from the test set is used as queries to retrieve visually similar images from the entire dataset.

Select a set of query images from the test set.

For each query image:

Compute the distance between its embedding and the embeddings of all images in the dataset.

Rank the dataset images based on distance scores.

Visualize the top-5 most similar images.

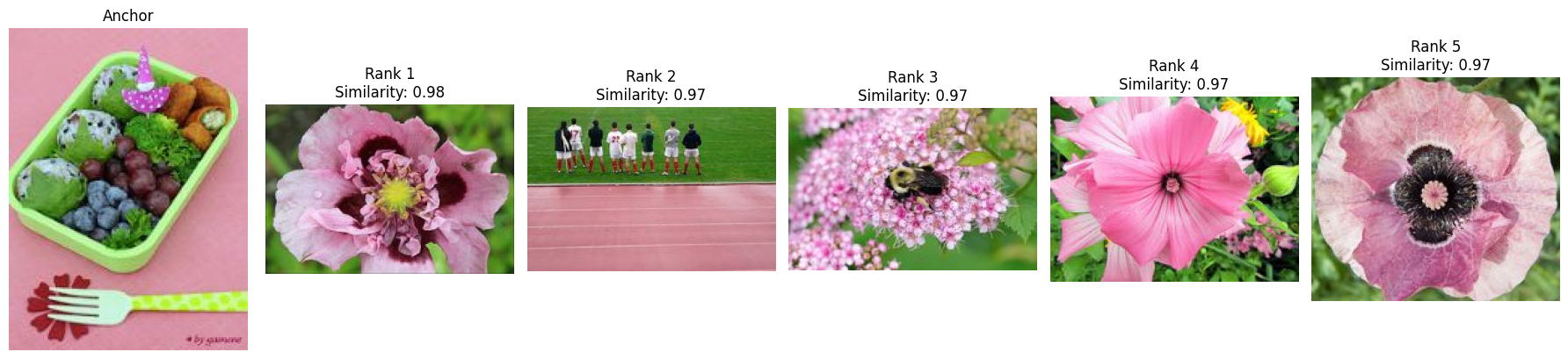

You should obtain something like the following figure.

Duplicate Images#

In the dataset, there are some duplicate images. You should remove them when visualizing the top-5 most similar images. That is, you should never include the same image more than once in the top-5 results. You can use the imagehash library to compute a hash of the image and check for duplicates. The following code snippet shows how to do this.

from PIL.Image import Image # uv add pillow --OR-- pip install pillow

import imagehash # uv add imagehash --OR-- pip install imagehash

def similar_images(image1: Image, image2: Image, threshold: int = 5) -> bool:

"""

Compare two images using perceptual hashing.

"""

hash_function = imagehash.phash

hash1 = hash_function(image1)

hash2 = hash_function(image2)

diff = hash1 - hash2

# If the difference is small, consider them similar.

return diff < threshold

Part 2: Palette-to-Image Retrieval#

In this setup, a synthetic color palette image is used as a query to retrieve images from the dataset.

Randomly select 1–3 colors from the predefined color palette.

Construct a random image filled with pixels of the selected colors.

Prepare the query image:

Apply MobileNetV3 preprocessing.

Extract features using the pretrained MobileNetV3 (convolutional backbone + global pooling).

Pass the flattened features through the trained model to obtain a query embedding.

Compute the distance between the query embedding and all image embeddings in the dataset.

Rank the dataset images based on distance scores.

Visualize the top-5 most similar images.

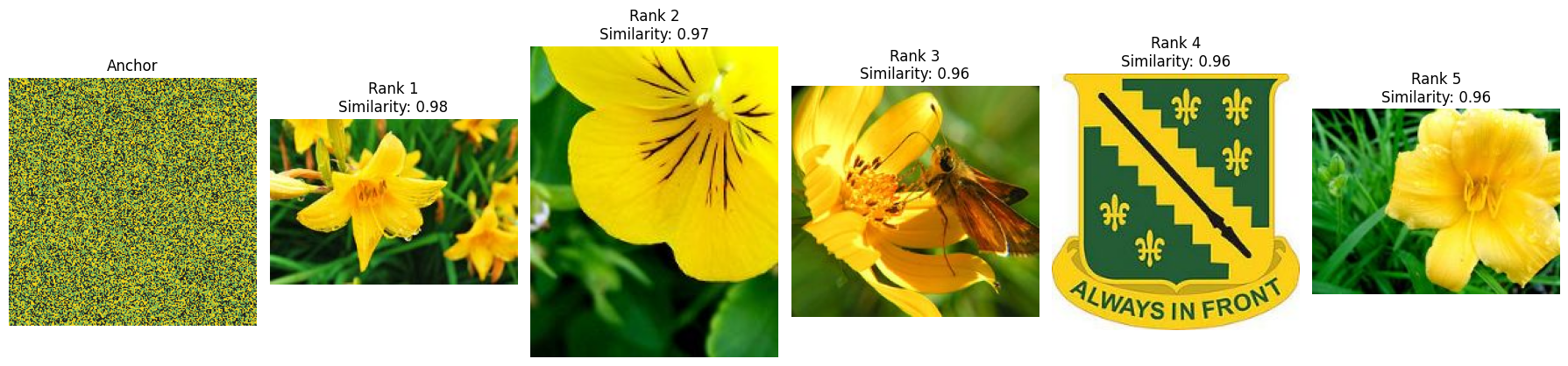

You should obtain something like the following figure.

Predefined Color Palette#

You can use the following code snippet to define the color palette, defined as a dictionary of RGB tuples.

palette = {

'Red': [222, 50, 60], # Red (de323c)

'Orange': [241, 136, 40], # Orange (f18828)

'Yellow': [241, 212, 28], # Yellow (f1d41c)

'Green': [111, 176, 88], # Green (6fb058)

'Cyan': [106, 187, 211], # Cyan (6abbd3)

'Blue': [67, 126, 177], # Blue (437eb1)

'Violet': [123, 95, 152], # Violet (7b5f98)

'Pink': [231, 166, 193], # Pink (e7a6c1)

'White': [255, 255, 255], # White (ffffff)

'Gray': [136, 136, 136], # Gray (888888)

'Black': [34, 34, 34] # Black (222222)

}