Linear algebra

NumPy supports linear algebra both through operators on arrays, such as * and @, and through the many functions in its numpy.linalg submodule.

import numpy as np
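Here is a quick look at numpy.linalg. The sketch below uses three of its functions (np.linalg.solve, np.linalg.det and np.linalg.norm) on a small system. The names M, rhs and v and their values are made up for this illustration and do not come from the text.

```python
import numpy as np

# Illustrative 2x2 system M @ sol = rhs (values chosen for this example only)
M = np.array([[2.0, 1.0],
              [1.0, 3.0]])
rhs = np.array([3.0, 5.0])

sol = np.linalg.solve(M, rhs)    # solve the linear system M @ sol = rhs
det = np.linalg.det(M)           # determinant of M
v = np.array([3.0, 4.0])
length = np.linalg.norm(v)       # Euclidean (2-)norm of v

print("solution:", sol)
print("det(M):", det)
print("||v||:", length)
```

For solving systems, np.linalg.solve is generally preferred over forming np.linalg.inv(M) @ rhs, because it avoids computing the inverse explicitly.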

Multiplication

There are several ways to multiply two vectors. The most natural is the element-wise product, which does exactly what its name suggests: it multiplies two vectors of the same size element by element. The result is a vector of that same size.

In NumPy, this operation is performed by the * operator.

x = np.array([2.1, -5.7, 13])

y = np.array([4.3, 9.2, 13])

p = x * y

print(p)

[ 9.03 -52.44 169. ]
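The sketch below makes "element by element" concrete. It checks that x * y matches both np.multiply and an explicit Python loop, and then shows what happens when the sizes differ. The vectors u and w are hypothetical inputs added only for this illustration.

```python
import numpy as np

x = np.array([2.1, -5.7, 13])
y = np.array([4.3, 9.2, 13])

p_op = x * y                 # operator form
p_fn = np.multiply(x, y)     # equivalent ufunc call
# Explicit loop: p[i] = x[i] * y[i]
p_loop = np.array([x[i] * y[i] for i in range(len(x))])

print(np.array_equal(p_op, p_fn), np.allclose(p_op, p_loop))

# Vectors of different sizes cannot be multiplied element-wise
u = np.array([1, 2, 3])
w = np.array([1, 2])
try:
    u * w
    mismatch_error = False
except ValueError as err:
    mismatch_error = True
    print("ValueError:", err)
```

Strictly speaking, NumPy's broadcasting rules also accept some operands of different shapes, for example a scalar times a vector. Two 1-D arrays of lengths 3 and 2, however, are rejected.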

Scalar product

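The scalar product (also called the dot product or inner product) is another way to multiply two vectors of the same dimension: multiply the vectors element by element, then add up the results. For two generic column vectors \(\mathbf{x}\in\mathbb{R}^N\) and \(\mathbf{y}\in\mathbb{R}^N\), the scalar product is mathematically defined as

\[
\mathbf{x}^\top\mathbf{y} = \sum_{n=1}^{N} x_n\, y_n .
\]

Unlike the element-wise product, the result is a single number, not a vector.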

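NumPy provides the @ operator and the np.dot() function to compute exactly this when both operands are 1-D arrays. Both also accept 2-D arrays, in which case they compute matrix-vector and matrix-matrix products, as described in the following sections.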

dot = x @ y

print(" x:", x)

print(" y:", y)

print("x@y:", dot)

x: [ 2.1 -5.7 13. ]

y: [ 4.3 9.2 13. ]

x@y: 125.59
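By definition, the scalar product is the sum of the element-wise product. The sketch below checks that x @ y, np.dot(x, y) and np.sum(x * y) agree for the same x and y. It also shows one difference between the two NumPy spellings: np.dot accepts a scalar operand (and then simply scales the array), whereas @ (np.matmul) requires operands with at least one dimension and raises a ValueError otherwise.

```python
import numpy as np

x = np.array([2.1, -5.7, 13])
y = np.array([4.3, 9.2, 13])

d_op = x @ y             # scalar product via the @ operator
d_fn = np.dot(x, y)      # same operation via np.dot
d_sum = np.sum(x * y)    # definition: multiply element-wise, then add up

print(d_op, d_fn, d_sum)

# np.dot with a scalar operand just scales the array...
scaled = np.dot(2.0, x)
# ...while matmul (the function behind @) rejects 0-d operands
try:
    np.matmul(2.0, x)
    matmul_scalar_ok = True
except ValueError:
    matmul_scalar_ok = False
```

The three results can differ in the last floating-point digits because the additions may be carried out in a different order, so the comparisons should use a tolerance (np.isclose) rather than ==.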

Matrix-vector product

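The @ operator can also compute matrix-vector and vector-matrix products. Assume that \(A\in\mathbb{R}^{N\times K}\), \({\bf b}\in\mathbb{R}^{K}\), and \({\bf c}\in\mathbb{R}^{N}\).

Mathematically, the exact operation performed by @ depends on the order of the operands.

A @ b multiplies the vector \({\bf b}\) by the rows of \(A\). Each entry of the result is the scalar product of \({\bf b}\) with one row of \(A\):

\[
(A{\bf b})_n = \sum_{k=1}^{K} A_{nk}\, b_k, \qquad n = 1,\dots,N.
\]

c @ A multiplies the vector \({\bf c}\) by the columns of \(A\). Each entry of the result is the scalar product of \({\bf c}\) with one column of \(A\):

\[
({\bf c}^\top A)_k = \sum_{n=1}^{N} c_n\, A_{nk}, \qquad k = 1,\dots,K.
\]

The result is a vector in both cases: \(A{\bf b}\in\mathbb{R}^{N}\) and \({\bf c}^\top A\in\mathbb{R}^{K}\). Because 1-D NumPy arrays have no row or column orientation, @ treats b as a column vector when it is the right operand and c as a row vector when it is the left operand.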

A = np.array([[1,3,1],[2,5,1]])

b = np.array([1, 1, 1])

c = np.array([1, 1])

Ab = A @ b

cA = c @ A

print("--- A ---")

print(A)

print("--- b ---")

print(b)

print()

print("A @ b:", Ab)

print("c @ A:", cA)

--- A ---

[[1 3 1]
 [2 5 1]]

--- b ---

[1 1 1]

A @ b: [5 8]

c @ A: [3 8 2]
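The sketch below connects the code with the two formulas. It recomputes A @ b as scalar products of b with the rows of A, and c @ A as scalar products of c with the columns of A. It also shows what happens if b is stored as an explicit 2-D column of shape (3, 1) instead of a 1-D array: the result keeps a column shape (2, 1) rather than being 1-D. The names Ab_rows, cA_cols, b_col and Ab_col are introduced only for this illustration.

```python
import numpy as np

A = np.array([[1, 3, 1], [2, 5, 1]])
b = np.array([1, 1, 1])
c = np.array([1, 1])

# A @ b: scalar product of b with each row of A
Ab_rows = np.array([A[n, :] @ b for n in range(A.shape[0])])
# c @ A: scalar product of c with each column of A
cA_cols = np.array([c @ A[:, k] for k in range(A.shape[1])])

print(Ab_rows, cA_cols)        # same values as A @ b and c @ A

# A 2-D column vector gives a 2-D (column) result
b_col = b.reshape(-1, 1)       # shape (3, 1)
Ab_col = A @ b_col             # shape (2, 1)
print(Ab_col.shape)
```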

Matrix product

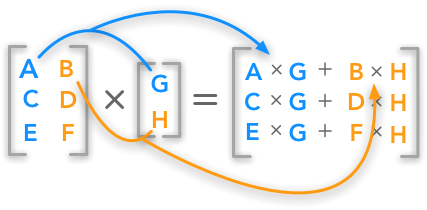

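When both operands are matrices, the @ operator performs matrix multiplication. Specifically, given two matrices \(A\in\mathbb{R}^{M\times N}\) and \(B\in\mathbb{R}^{N\times K}\), their product \(AB\in\mathbb{R}^{M\times K}\) is the matrix whose entries are the scalar products between the rows of \(A\) and the columns of \(B\):

\[
(AB)_{mk} = \sum_{n=1}^{N} A_{mn}\, B_{nk}, \qquad m = 1,\dots,M,\quad k = 1,\dots,K.
\]

The number of columns of \(A\) must equal the number of rows of \(B\); otherwise @ raises a ValueError.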

X = np.array([[1,3,1],[2,5,1]])

Y = np.array([[5,1],[9,2],[14,1]])

Z = X @ Y

print("--- X ---")

print(X)

print("--- Y ---")

print(Y)

print("--- X @ Y ---")

print(Z)

--- X ---

[[1 3 1]
 [2 5 1]]

--- Y ---

[[ 5  1]
 [ 9  2]
 [14  1]]

--- X @ Y ---

[[46  8]
 [69 13]]
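The row-by-column rule above can be spelled out with explicit loops. The helper matmul_naive below is hypothetical and exists only to illustrate the formula; in practice NumPy's @ is far faster. The sketch checks the helper against X @ Y. It also shows that the matrix product is not commutative: Y @ X is defined for these shapes too, but it is a 3-by-3 matrix.

```python
import numpy as np

def matmul_naive(A, B):
    """Matrix product via (AB)[m, k] = sum_n A[m, n] * B[n, k] (illustration only)."""
    M, N = A.shape
    N2, K = B.shape
    if N != N2:
        raise ValueError(f"inner dimensions differ: {N} vs {N2}")
    C = np.zeros((M, K), dtype=np.result_type(A, B))
    for m in range(M):
        for k in range(K):
            # scalar product of row m of A with column k of B
            C[m, k] = A[m, :] @ B[:, k]
    return C

X = np.array([[1, 3, 1], [2, 5, 1]])
Y = np.array([[5, 1], [9, 2], [14, 1]])

Z_loop = matmul_naive(X, Y)
print(Z_loop)
print(np.array_equal(Z_loop, X @ Y))

# Order matters: Y @ X is also defined here, but has a different shape
print((Y @ X).shape)
```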