Dataset & DataLoader

Code for processing data can get messy and hard to maintain. Ideally, dataset code is decoupled from model training code for better readability and modularity. PyTorch provides two data primitives in torch.utils.data that make data easy to work with:

- Dataset stores a collection of data samples and their corresponding labels.
- DataLoader wraps an iterable around a Dataset object to enable batching, shuffling, and more.
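To make this division of labor concrete, here is a minimal sketch of a custom map-style Dataset, assuming the data already fits in memory as tensors. The class name InMemoryDataset and the random data are hypothetical, for illustration only. A map-style Dataset only has to implement __len__ and __getitem__; the DataLoader handles batching and shuffling.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class InMemoryDataset(Dataset):
    """Hypothetical map-style dataset over tensors already held in memory."""

    def __init__(self, features, labels):
        assert len(features) == len(labels), "features and labels must align"
        self.features = features
        self.labels = labels

    def __len__(self):
        # Number of samples; the sampler uses this to generate indices
        return len(self.labels)

    def __getitem__(self, idx):
        # Return one (sample, label) pair; the DataLoader collates these into batches
        return self.features[idx], self.labels[idx]


# 100 fake 1x28x28 "images" with integer labels in [0, 10)
features = torch.rand(100, 1, 28, 28)
labels = torch.randint(0, 10, (100,))
ds = InMemoryDataset(features, labels)

loader = DataLoader(ds, batch_size=16, shuffle=True)
first_images, first_labels = next(iter(loader))
print(len(ds), first_images.shape, first_labels.shape)
# 100 torch.Size([16, 1, 28, 28]) torch.Size([16])
```

The training code only ever sees the loader, so swapping in a different Dataset does not require touching the training loop.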

PyTorch domain libraries provide a number of pre-loaded datasets that subclass Dataset. The TorchVision library, in particular, includes datasets for many computer vision tasks, such as MNIST, ImageNet, COCO, and many more. In this tutorial, we will use the MNIST dataset of handwritten digits.


```python
import torch
from torch.utils.data import DataLoader
from torchvision.datasets import MNIST
from torchvision.transforms import v2
import matplotlib.pyplot as plt
```

Loading the dataset

We load the MNIST dataset with the following parameters:

- root is the directory where the train/test data is stored.
- train selects the training split (True) or the test split (False).
- download=True downloads the data from the internet if it is not already available at root.
- transform accepts a function (any callable) that is applied to each image whenever a sample is retrieved, as a preprocessing step.

```python
preprocess = v2.Compose([v2.ToImage(), v2.ToDtype(torch.float32, scale=True)])
train_ds = MNIST('.data', train=True, download=True, transform=preprocess)
test_ds = MNIST('.data', train=False, download=True, transform=preprocess)
print('Train dataset size:', len(train_ds))
print(' Test dataset size:', len(test_ds))
```

```text
Train dataset size: 60000
 Test dataset size: 10000
```

The variable train_ds is a Dataset object that contains the images and the labels that the model will learn from. Similarly, test_ds contains the images that the model will be evaluated on.

Preprocessing#

Data does not always come in the final processed form required to train a model. The images in the MNIST dataset are stored as PIL images, and the labels are integers from 0 to 9. For training, we need the images as floating-point tensors with pixel values scaled to [0, 1]. To make these transformations, we use the transforms provided in the torchvision.transforms.v2 module.

Above, the MNIST dataset was instructed to preprocess the images with the following transforms:

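- ToImage(): converts a PIL image, a NumPy array, or a PyTorch tensor to the Image type, a subclass of torch.Tensor defined in TorchVision to facilitate image processing. For a PIL image, the result is a uint8 tensor in channels-first (C, H, W) layout.
- ToDtype(torch.float32, scale=True): converts the pixel values to 32-bit floats; scale=True also rescales them from the uint8 range [0, 255] to [0, 1].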

Note

Every TorchVision Dataset includes two arguments, transform and target_transform, that accept functions to modify the samples and the labels, respectively.

Let’s take a look at a preprocessed image. We can index a Dataset object like a list to retrieve an image and the corresponding label.

```python
image, label = train_ds[0]
```

```text
Image:
 - Type: <class 'torchvision.tv_tensors._image.Image'>
 - Min/Max: 0.0 - 1.0
 - Shape: 1 28 28
Label:
 - Type: <class 'int'>
 - Value: 5
```

Batching

A Dataset retrieves the images and labels one sample at a time. While training a model, we typically want to pass samples in "batches" and reshuffle the data at every epoch. The DataLoader class handles this for us behind a simple API. It takes a Dataset object as an argument and provides a Python iterable over the dataset with support for automatic batching, multi-process data loading, and many more features. We can configure a DataLoader with the following input arguments (see the torch.utils.data.DataLoader documentation for the full list):

- batch_size: Number of samples to load per batch. Default is 1.
- shuffle: If True, the data is reshuffled at every epoch. This is important for training. Default is False.
- num_workers: Number of subprocesses to use for data loading. The default, 0, means that the data is loaded in the main process, which can slow down training when loading a sample takes a considerable amount of time (e.g., large images). For tiny datasets, 0 workers is usually faster, because starting worker processes has its own overhead.
- pin_memory: If True, the DataLoader copies Tensors into pinned (page-locked) host memory before returning them. Copies from pinned memory to the GPU are faster, so this can save time when training on a GPU. Pinned memory is host RAM that cannot be paged out, so it only pays off for data that is actually moved to a GPU.
- drop_last: If True, the last batch is dropped when it is smaller than the specified batch size, which happens when the dataset size is not a multiple of the batch size. This is mainly useful during training to keep a consistent batch size. Default is False.
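Putting these arguments together, the sketch below configures loaders without downloading anything: a TensorDataset of 60,000 dummy indices stands in for the MNIST training set (same length, so the batch counts match what a loader over train_ds would produce). The choice of num_workers=0 and tying pin_memory to GPU availability are assumptions to adapt to your machine.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in dataset with the same length as the MNIST training set
train_like = TensorDataset(torch.arange(60_000))

use_cuda = torch.cuda.is_available()

loader = DataLoader(
    train_like,
    batch_size=64,
    shuffle=True,         # new sample order every epoch
    num_workers=0,        # load in the main process; try > 0 when loading is slow
    pin_memory=use_cuda,  # page-locked host memory only helps host-to-GPU copies
    drop_last=False,      # keep the final, smaller batch
)
loader_drop = DataLoader(train_like, batch_size=64, shuffle=True, drop_last=True)

print(len(loader), len(loader_drop))  # 938 937

# Batch sizes over one epoch; only the last batch is partial
sizes = [batch.shape[0] for (batch,) in loader]
print(sizes[0], sizes[-1], sum(sizes))  # 64 32 60000
```

With num_workers > 0, scripts on platforms that start workers with the spawn method (Windows, macOS) should create and iterate the loader under an if __name__ == "__main__": guard.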

Here we define a batch size of 64. As a result, each batch fetched by the data loader is a tensor of 64 images and a tensor of 64 labels, except for the last batch, which holds the 32 samples left over because 60000 is not a multiple of 64.

```python
train_loader = DataLoader(train_ds, batch_size=64, shuffle=True)
test_loader = DataLoader(test_ds, batch_size=64)
```
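A DataLoader is an iterable: each call to iter() starts a new pass (epoch) over the data, and with shuffle=True every pass draws a fresh order. The sketch below shows this on a tiny hypothetical TensorDataset, with a seeded torch.Generator (an assumption, added only to make the order reproducible), and uses next(iter(...)) as a one-liner for grabbing a single batch.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in dataset: 10 samples, each just its own index
ds = TensorDataset(torch.arange(10))
g = torch.Generator().manual_seed(0)  # seeded so the shuffled order is reproducible
loader = DataLoader(ds, batch_size=4, shuffle=True, generator=g)

# First batch of a fresh pass over the data
(first_batch,) = next(iter(loader))
print(first_batch.shape)  # torch.Size([4])

# Each full pass (epoch) visits every sample exactly once, in a newly shuffled order
orders = [torch.cat([batch for (batch,) in loader]) for _ in range(2)]
for epoch, order in enumerate(orders):
    print(f"epoch {epoch}:", order.tolist())
```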

Let’s retrieve the first batch of training samples.

```python
for images, labels in train_loader:
    print("Image Batch Shape:", *images.shape)
    print("Label Batch Shape:", *labels.shape)
    break  # without this, it will print for all batches
```

```text
Image Batch Shape: 64 1 28 28
Label Batch Shape: 64
```

Note that the images and the labels are stacked along the first dimension (axis=0).

Important

In a tensor holding a batch of samples, the leading dimension (axis 0) conventionally corresponds to the sample index. Think of it as the axis that keeps track of the samples in a batch.

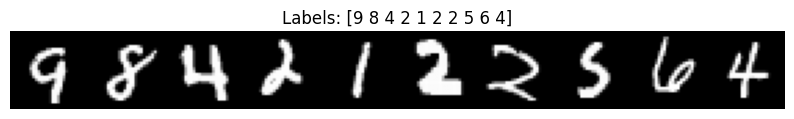
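This stacking is done by the DataLoader's default collate function, which gathers the individual samples of a batch and stacks each field along a new leading dimension. The sketch below calls torch.utils.data.default_collate (a public function in recent PyTorch versions) directly on four made-up samples to show where axis 0 comes from.

```python
import torch
from torch.utils.data import default_collate

# Four made-up (image, label) samples, shaped like preprocessed MNIST samples
samples = [(torch.rand(1, 28, 28), label) for label in [7, 2, 1, 0]]

# default_collate regroups the pairs and stacks each field along a new axis 0
images, labels = default_collate(samples)

print(images.shape)  # torch.Size([4, 1, 28, 28])
print(labels)        # tensor([7, 2, 1, 0])

# Indexing axis 0 recovers an individual sample
print(torch.equal(images[2], samples[2][0]))  # True
```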

To better understand the data, we can visualize some of the images and labels in the first batch.


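```python
n = 10  # number of samples to display
# Drop the channel axis of each image (1x28x28 -> 28x28) and place them side by side
side_by_side = torch.cat([img[0] for img in images[:n]], dim=1)
plt.figure(figsize=(10, 5))
plt.imshow(side_by_side, cmap='gray')
plt.title("Labels: " + str(labels[:n].numpy()))
plt.axis('off')
plt.show()
```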

Summary

In this tutorial, we learned how to load a dataset, preprocess the data, and divide it into batches. These are the basic steps that are required to prepare data for training a neural network in PyTorch. In the next tutorial, we will learn how to define a neural network.