Image retrieval

Once the model is trained, the retrieval process involves the following steps.

1. Compute the embeddings of all the images in the retrieval set.
2. Compute the embedding of the query attribute vector.
3. Compute the distances between the query embedding and the embeddings of all the retrieval set images.
4. Return the images closest to the query, based on the computed distances.
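The last two steps (distance computation and ranking) can be sketched as follows. This is a minimal illustration, not the method used by the trained model: the Euclidean distance, the helper name `retrieve_top_k`, and the toy two-dimensional embeddings are all assumptions made for the example. In practice the embeddings would come from the trained model.

```python
import math


def retrieve_top_k(query_embedding, image_embeddings, k=5):
    """Return the indices of the k images closest to the query.

    Hypothetical helper: Euclidean distance is assumed here; the
    distance actually used depends on how the model was trained.
    """
    # Distance between the query and every image in the retrieval set.
    distances = [math.dist(query_embedding, emb) for emb in image_embeddings]
    # Image indices sorted by increasing distance, truncated to k.
    ranked = sorted(range(len(distances)), key=distances.__getitem__)
    return ranked[:k]


# Toy stand-ins for the embeddings produced by the first two steps.
image_embeddings = [[0.0, 1.0], [1.0, 0.0], [0.9, 0.1], [0.5, 0.5]]
query_embedding = [1.0, 0.0]

top = retrieve_top_k(query_embedding, image_embeddings, k=2)
print(top)
```

Sorting the full list of distances is fine for small retrieval sets. For large sets, an approximate nearest-neighbor index is a common alternative.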

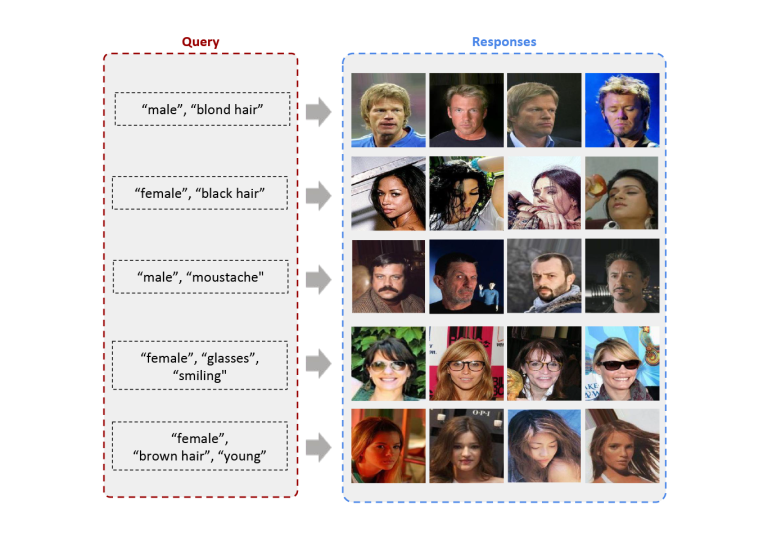

The figure below shows some example retrieval results. The query is a list of attributes, and the model retrieves images that match these attributes.

Evaluation metrics

To evaluate the performance of the image retrieval system, you need to check if the retrieved images for a given query contain the desired attributes. This operation is done on a set of queries and images that were never used during training. There are different metrics used in the literature to evaluate retrieval systems.

Precision@K: The proportion of the top K retrieved images that are relevant to the query. For example, if you retrieve K = 5 images and 3 of them are relevant to the query, the Precision@5 is 3/5 = 0.6. This metric focuses on the accuracy of the top K retrieved items, but does not consider whether the retrieval system finds all relevant results. A high Precision@K means that most of the top K retrieved results are relevant.
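A minimal sketch of Precision@K, reproducing the 3/5 example above. The function name `precision_at_k` and the input format (a list of booleans in ranked order, `True` meaning the image matches the query attributes) are assumptions chosen for illustration.

```python
def precision_at_k(ranked_relevance, k):
    """Fraction of the top-k retrieved images that are relevant.

    ranked_relevance: booleans in ranked order (hypothetical format),
    True if the image at that rank is relevant to the query.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    # True counts as 1 and False as 0 when summed.
    return sum(ranked_relevance[:k]) / k


# Five retrieved images, three of which are relevant.
ranked_relevance = [True, False, True, True, False]
p_at_5 = precision_at_k(ranked_relevance, k=5)
print(p_at_5)
```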

Recall@K: The proportion of all relevant images in the retrieval set that appear in the top K results. For example, if there are 10 relevant images in the dataset and the system retrieves 3 of them in the top K = 5 results, the Recall@5 is 3/10 = 0.3. This metric focuses on the ability of the retrieval system to find all relevant results, but does not penalize irrelevant items in the top K. A high Recall@K means that the retrieval system successfully finds a large fraction of all relevant items.
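A minimal sketch of Recall@K, reproducing the 3/10 example above. The function name `recall_at_k`, the boolean input format, and the convention of returning 0.0 when a query has no relevant images are assumptions made for illustration.

```python
def recall_at_k(ranked_relevance, k, num_relevant):
    """Fraction of all relevant images that appear in the top k.

    ranked_relevance: booleans in ranked order (hypothetical format).
    num_relevant: total number of relevant images in the retrieval set.
    """
    if num_relevant == 0:
        # Assumed convention: recall is reported as 0.0 when nothing is relevant.
        return 0.0
    return sum(ranked_relevance[:k]) / num_relevant


# Three relevant images in the top 5, out of 10 relevant images overall.
ranked_relevance = [True, False, True, True, False]
r_at_5 = recall_at_k(ranked_relevance, k=5, num_relevant=10)
print(r_at_5)
```

Unlike Precision@K, the denominator here does not depend on K, so Recall@K can only stay the same or increase as K grows.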

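Mean Average Precision (mAP): For a single query, the Average Precision (AP) is the average of the Precision@K values taken at each rank K where a relevant image appears, so it rewards rankings that place relevant images early. The mAP is the mean of the AP over all queries. This metric is more expensive to compute and less intuitive than Precision@K or Recall@K, but it summarizes the quality of the whole ranking rather than a single cutoff.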
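A minimal sketch of mAP under one commonly used formulation: for each query, precision is averaged over the ranks at which relevant images appear (Average Precision), and the result is then averaged over queries. The function names and the boolean input format are assumptions for illustration, and the sketch assumes each ranked list covers the whole retrieval set, so every relevant image appears somewhere in it.

```python
def average_precision(ranked_relevance):
    """Average of Precision@K over the ranks K that hold a relevant image."""
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            # Precision@rank, evaluated only where a relevant image occurs.
            precision_sum += hits / rank
    # Assumed convention: AP is 0.0 for a query with no relevant images.
    return precision_sum / hits if hits else 0.0


def mean_average_precision(per_query_relevance):
    """Mean of the per-query Average Precision values."""
    aps = [average_precision(r) for r in per_query_relevance]
    return sum(aps) / len(aps)


# Two toy queries with their ranked relevance lists.
per_query_relevance = [
    [True, False, True],   # AP = (1/1 + 2/3) / 2
    [False, True],         # AP = (1/2) / 1
]
map_score = mean_average_precision(per_query_relevance)
print(map_score)
```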