Evaluation

After training, you should evaluate the model on a separate test set to measure its performance. Since the task involves both detecting the presence of an object and predicting its location, you will assess the model on two aspects: classification accuracy and localization quality. Importantly, all test images must undergo the same preprocessing and feature extraction steps that were applied to the training set. Otherwise the model receives inputs that differ systematically from what it was trained on, and the measured performance is not meaningful.
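A minimal sketch of what "the same preprocessing" means in practice, assuming features are plain lists of numbers and that per-feature standardization is one of the preprocessing steps (the section does not specify which steps are used). The statistics are computed on the training set only and then reused unchanged on the test set. The function names are hypothetical.

```python
from statistics import mean, pstdev


def fit_standardizer(train_features):
    """Compute per-dimension mean and standard deviation from training feature vectors."""
    columns = list(zip(*train_features))
    means = [mean(col) for col in columns]
    # Fall back to 1.0 for constant features so we never divide by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds


def apply_standardizer(features, means, stds):
    """Apply the *training* statistics to any split (train, validation, or test)."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in features]


train = [[1.0, 10.0], [3.0, 30.0]]
test = [[2.0, 20.0]]
means, stds = fit_standardizer(train)        # fitted on training data only
print(apply_standardizer(test, means, stds))  # [[0.0, 0.0]]
```

Refitting the statistics on the test set would silently change the input distribution the model sees, which is exactly the inconsistency the paragraph warns against.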

Classification (binary)

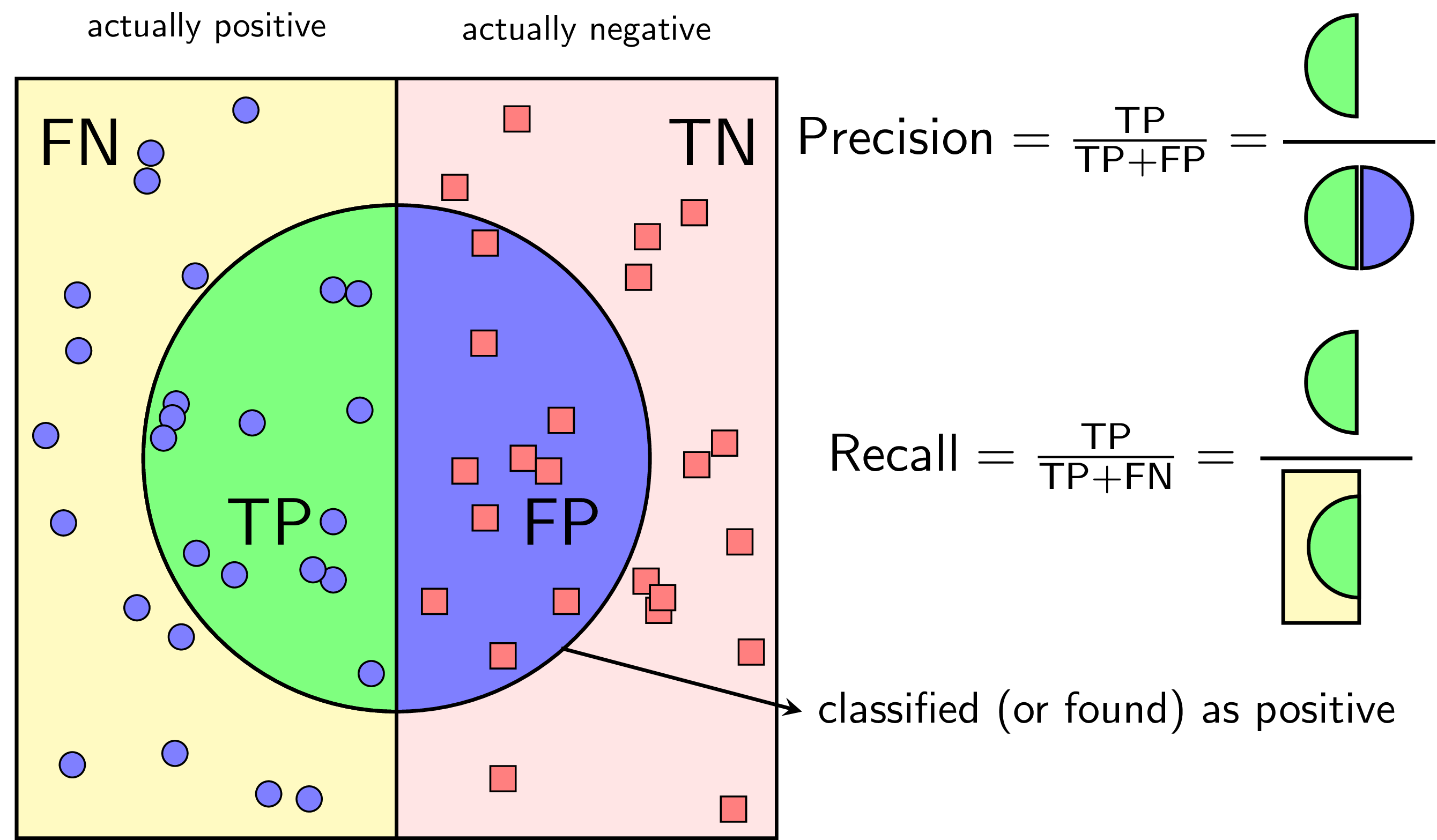

Classification evaluation measures how well the model predicts whether an object of the target category is present in an image. Commonly used metrics include the following.

Accuracy: The proportion of images that are classified correctly.

Precision: The fraction of predicted positives that are actually positive.

Recall: The fraction of actual positives that the model correctly detects.

Precision-Recall Curve: A plot of precision versus recall at different classification thresholds. This is especially useful for imbalanced datasets, where accuracy alone can be misleading: if 95% of test images contain no object, a model that always predicts "absent" still reaches 95% accuracy.

The figure at the start of this section visualizes the definitions of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). All of the metrics listed above can be computed from these four counts.
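In terms of these counts, accuracy is (TP + TN) / (TP + TN + FP + FN), precision is TP / (TP + FP), and recall is TP / (TP + FN). The pure-Python sketch below computes them, assuming labels are encoded as 1 (object present) and 0 (absent) and that the classifier outputs a score which is thresholded to give a prediction. The function names are hypothetical; libraries such as scikit-learn provide equivalents (`accuracy_score`, `precision_score`, `recall_score`, `precision_recall_curve`).

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = object present, 0 = absent)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall); undefined ratios are reported as 0.0."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


def pr_curve_points(y_true, scores):
    """Return (threshold, precision, recall) triples, predicting positive when score >= threshold."""
    points = []
    for threshold in sorted(set(scores), reverse=True):
        y_pred = [1 if s >= threshold else 0 for s in scores]
        _, precision, recall = classification_metrics(y_true, y_pred)
        points.append((threshold, precision, recall))
    return points


y_true = [1, 0, 1, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold of 0.5 (an assumption)
print(classification_metrics(y_true, y_pred))    # accuracy 0.6, precision 2/3, recall 2/3
print(pr_curve_points(y_true, scores))
```

Sweeping the threshold from high to low trades precision for recall; plotting the resulting (recall, precision) pairs gives the precision-recall curve.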

Localization

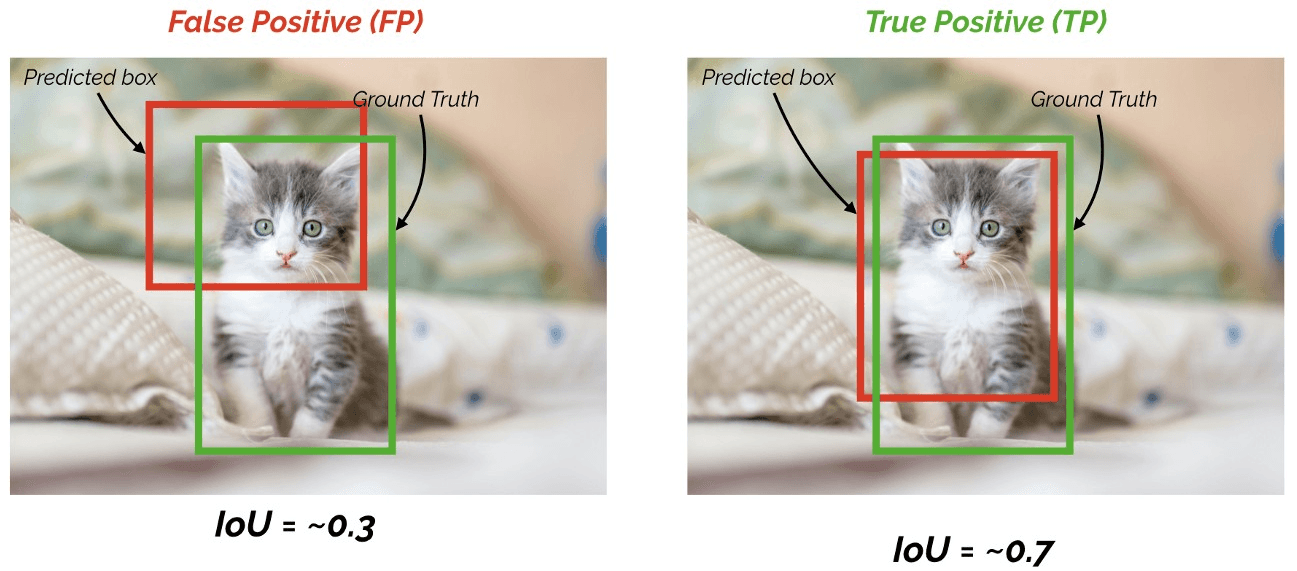

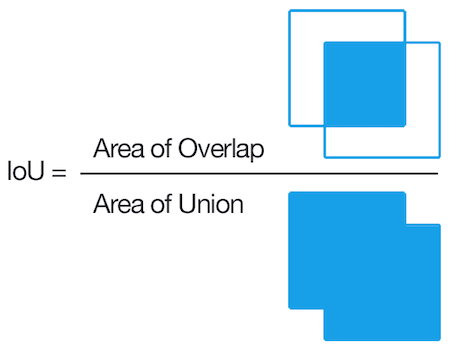

To evaluate how accurately the model predicts bounding boxes, the Intersection over Union (IoU) metric is used. IoU is the ratio of the area of overlap between the predicted and ground-truth bounding boxes to the area of their union. It ranges from 0 (no overlap) to 1 (the boxes coincide exactly), and a higher IoU indicates better localization.
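For axis-aligned boxes described by corner coordinates $(x_1, y_1, x_2, y_2)$ (an assumed convention; some datasets store a corner plus width and height instead), with $B_p$ the predicted box and $B_g$ the ground-truth box, the definition can be written out explicitly. The union area follows from inclusion-exclusion, so it never needs to be computed geometrically:

$$
\mathrm{IoU}(B_p, B_g) = \frac{|B_p \cap B_g|}{|B_p \cup B_g|} = \frac{|B_p \cap B_g|}{|B_p| + |B_g| - |B_p \cap B_g|}
$$

$$
|B_p \cap B_g| = \max\!\left(0,\ \min(x_2^p, x_2^g) - \max(x_1^p, x_1^g)\right) \cdot \max\!\left(0,\ \min(y_2^p, y_2^g) - \max(y_1^p, y_1^g)\right)
$$

For example, with $B_p = (0, 0, 2, 2)$ and $B_g = (1, 1, 3, 3)$, the intersection is $1 \cdot 1 = 1$, the union is $4 + 4 - 1 = 7$, and $\mathrm{IoU} = 1/7 \approx 0.14$.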

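Typically, an IoU threshold is set to decide whether a predicted bounding box counts as a true positive: the prediction is accepted only if its IoU with the ground-truth box meets or exceeds the threshold. The figures at the start of this section illustrate this concept with an IoU threshold of 0.5.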
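A minimal sketch of IoU together with the thresholding rule, assuming boxes are `(x1, y1, x2, y2)` tuples with `x1 < x2` and `y1 < y2`, and that each positive test image has exactly one ground-truth box and one predicted box (a simplifying assumption for the single-object setting described here). The helper names are hypothetical, and the 0.5 default mirrors the threshold mentioned in the text.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Width and height of the overlap; clamped at 0 when the boxes do not intersect.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_correct_localization(pred_box, gt_box, threshold=0.5):
    """Count a predicted box as a true positive if its IoU with the ground truth meets the threshold."""
    return iou(pred_box, gt_box) >= threshold


def localization_accuracy(pred_boxes, gt_boxes, threshold=0.5):
    """Fraction of images whose predicted box passes the IoU threshold."""
    hits = sum(is_correct_localization(p, g, threshold) for p, g in zip(pred_boxes, gt_boxes))
    return hits / len(gt_boxes) if gt_boxes else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))                          # 1/7, about 0.143
print(is_correct_localization((0, 0, 10, 10), (1, 0, 10, 10)))  # True (IoU = 0.9)
```

Using `>=` means a box whose IoU is exactly at the threshold is accepted; conventions differ on this boundary case, so it is worth stating explicitly when reporting results.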